Training Course: The Best Neural Networks (AI) for Improving, Creating and Editing Images and Videos [from scratch to professional level] by CGBandit

Due to the expansion of the program with additional materials, the course description was updated on November 19.

The online training course is suitable for the widest possible range of users, but it will be especially valuable for 3D visualizers, designers, architects, AI creators, social media managers, bloggers, photographers, 2D and 3D artists, and other visual content creators. The training program is designed both for absolute beginners starting from scratch and for users who already have experience working with neural networks.

Hello, dear friends and students! I’m Valentin Kuznetsov. I am the head of the CGBandit educational project.

I am glad to present the training program of our new course: "The Best Neural Networks (AI) for Improving, Creating and Editing Images and Videos".

After completing the training, you will have the knowledge and skills to use the most powerful neural networks for professional improvement, creation and editing of images and videos.

With the knowledge provided in the course, you will be able to produce an impressive level of graphics using neural networks.

This page is the course description. We recommend opening it on a computer monitor so that you can see the "before and after" example images in full detail. On a phone, because of the small screen, many important details of the images will go unnoticed.

The course program is the result of our team selecting the best from a huge array of information and tools. This will save you a lot of time and effort that you would otherwise spend searching for the right material among the information junk on the Internet.

Course content

- DIRECTION 1 - [AI Upscale & Enhancer] Improve the details and increase the resolution of images: photos, generations, 3D renders, illustrations

- DIRECTION 2 - Video generation from an image (Image to Video) using paid neural networks

- DIRECTION 3 - Enhancement of 3D models of people in your 3D visualizations

- DIRECTION 4 - Free image generation based on your sketches, drawings, photographs, 3D renders and any other images, using a control map and, if necessary, an added reference, with Stable Diffusion on SDXL, FLUX and other models

- DIRECTION 5 - INPAINT (insertion/inpainting): a local image editing function for adding objects to an image

- DIRECTION 6 - INPAINT: mask insertion using a control map, based on your sketches, drawings, images, 3D renders, etc., with the free Stable Diffusion on SDXL, FLUX and other models

- DIRECTION 7 - Creating images based on a text prompt using the free Stable Diffusion on SDXL and FLUX, as well as paid neural networks

- DIRECTION 8 - Free image generation based on a REFERENCE (an example image) using Stable Diffusion on SDXL, FLUX and other models

AI Upscale & Enhancer by CGBandit

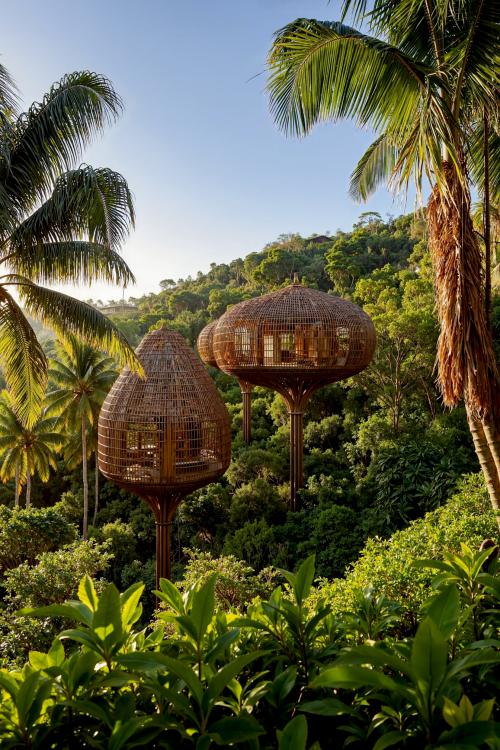

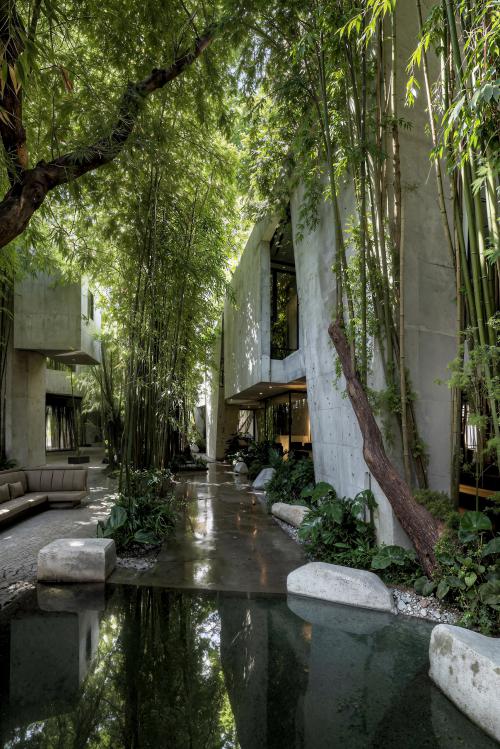

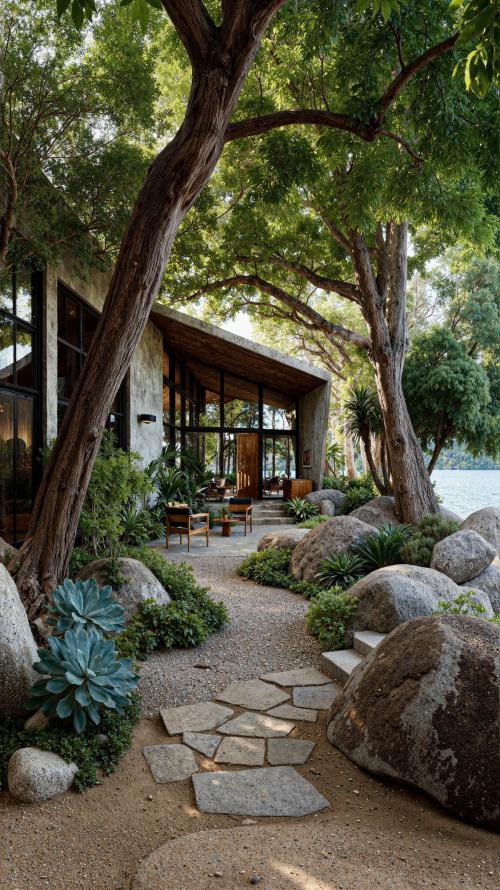

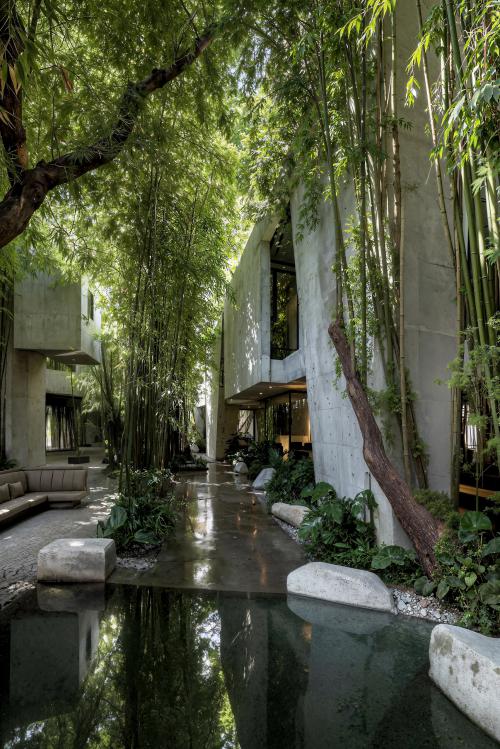

Improve the details and increase the resolution of images: photos, generations, 3D renders, illustrations. The "AI Upscale & Enhancer" neural tool lets you increase image resolution while improving image quality: it adds detail, strengthens the overall effect, improves chiaroscuro and texture quality, adds sharpness and clarity, and works through each pixel to increase the visual appeal of the image.

This is a real treat for 3D and CG artists. Improve your 3D visualizations and increase the image resolution. Where the render engine falls short in visual impact and photorealism, the neural network will fill in the gaps, add detail, improve the pattern of light and shadow, sculpt the volume, and work on the objects that are usually resource-intensive to perfect in pure 3D graphics: fluffy carpets, throw blankets, people, plants, water, natural landscapes, and much more. With the workflow and the access to neural networks that you will receive, you can significantly increase the photorealism of your 3D visualizations by improving and detailing your renders.

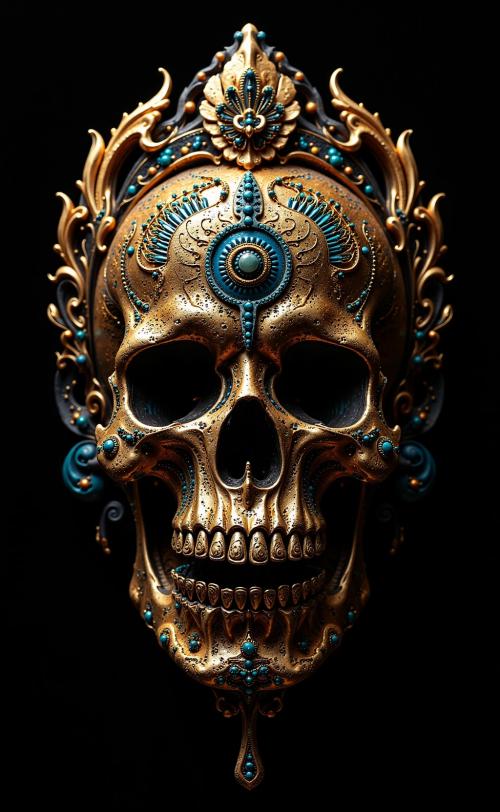

AI Upscale is more than just increasing the resolution of an image. In precision mode, it strives to preserve all materials, textures, and details. In creative mode, the tool transforms an image into a new piece: it completes textures, changes materials, adds details and makes the result even more expressive. Below is an example of using creative mode.

An example of "AI Upscale & Enhancer" at work

An example of "AI Upscale & Enhancer" increasing the resolution and improving the details of an image of a painting

EXAMPLE

Video generation from image (Image to Video) using paid neural networks

We will teach you how to create PRO-level video fly-throughs using the best AI technologies for generating video from an image (Image to Video). You will save time and tens of thousands of rubles on tests and experiments by learning which neural networks give the best results.

EXAMPLES OF VIDEO GENERATION (PRO-LEVEL GRAPHICS) THAT YOU WILL BE ABLE TO PRODUCE AFTER COMPLETING THE COURSE

Enhancement of 3D models of people in your 3D visualizations

Enhance 3D models of people to maximum photorealism in your 3D renders. Where the render engine falls short, AI technologies come to the rescue.

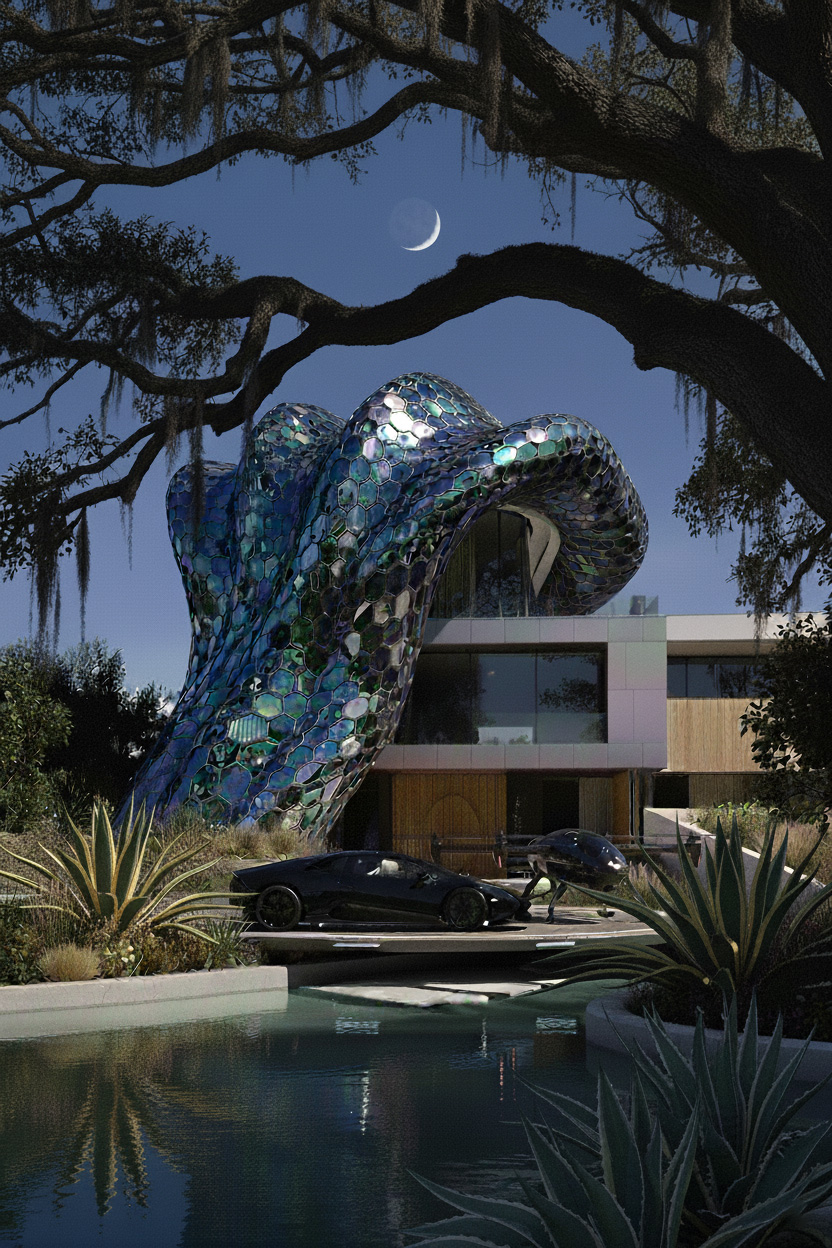

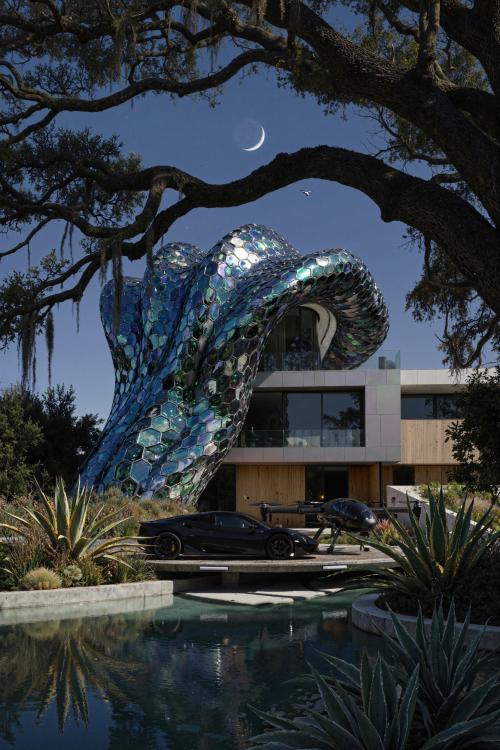

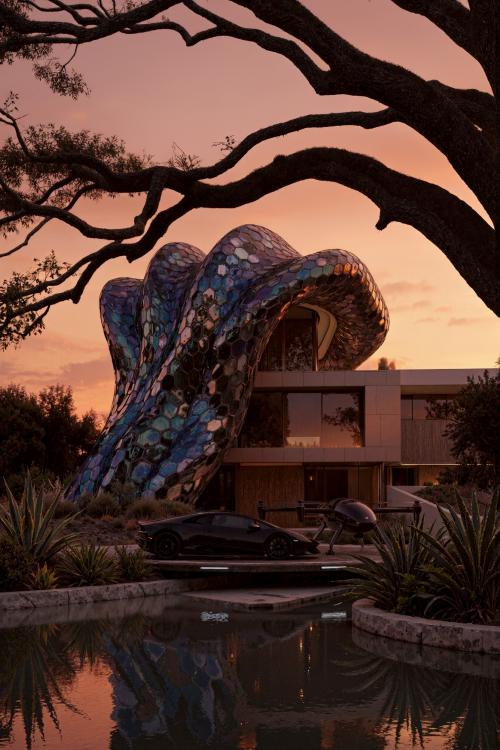

EXAMPLES

Image generation based on your sketches, drawings, photographs, 3D renders and any other images, using a control map and, if necessary, an added reference

EXAMPLES

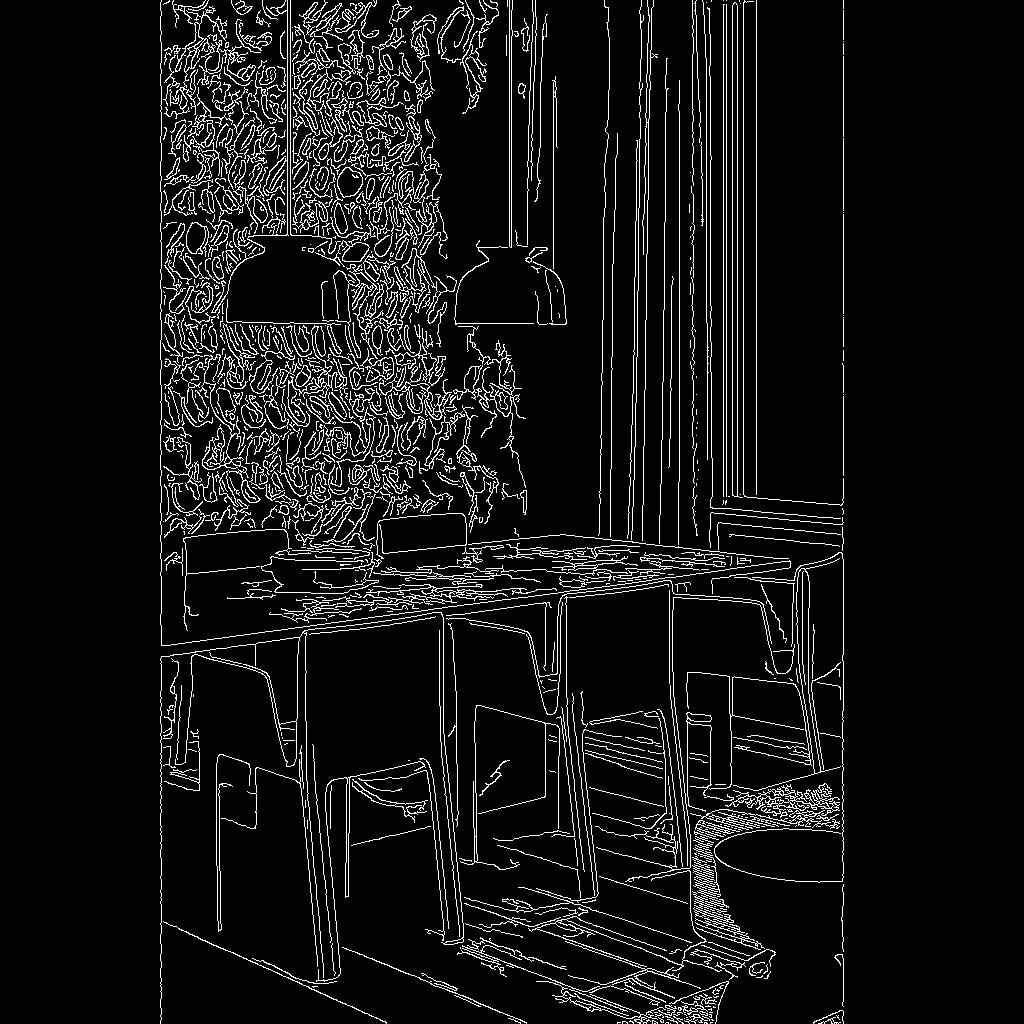

INPAINT (insertion / inpainting): a local image editing function for adding objects to an image by effect or by reference

EXAMPLES

INPAINT - mask insertion using a control map, based on your sketches, drawings, images, 3D renders, etc.

EXAMPLES

Creating images based on a text prompt using the free Stable Diffusion on SDXL and FLUX, as well as paid neural networks

EXAMPLES

Create images based on text prompts: the neural network converts your text description into a complete visual image. You express your idea in words, specifying details, style, atmosphere, color scheme, and even emotional tone, and the neural network turns these words into a unique visual result.

This approach has a number of key advantages:

- Control over content: you specify what you want to see in the image, including details of objects, background, composition, and style.

- Rapid prototyping and experimentation: you can create multiple versions of a single idea and test different styles and effects without spending time on manual drawing.

- Creating unique content: each image is generated individually by a neural network, which makes it one of a kind. You get original content and reduce the risk of infringing someone else's copyright.

Using text prompts turns image generation into an interactive creative process, where your words become a tool and the neural network becomes your “virtual artist.”

Social media – create stunning photos, vivid illustrations, creative posts, visuals for stories and covers that will grab your audience's attention.

Website and blog design – fill your pages with stylish banners, background images, and unique graphic elements.

Menus and promotional materials – generate attractive photos of dishes, promotional posters or brochures that will make your business stand out from the competition.

Covers and presentations – create memorable images for books, podcasts, albums, slides, or commercial proposals.

Versatile solutions – from profile images and logos to full-fledged art illustrations for printing.

Image generation based on a REFERENCE

Generate images based on one or more references, so that the new image resembles the reference in style, color, and other qualities.

EXAMPLES

The online course includes:

- Access to the course training videos on our online platform Nodsmap.com. You get round-the-clock access to the video materials, the structure of the training program and related materials on Nodsmap.com wherever you have an internet connection. You can watch the video tutorials at any time convenient for you.

- The opportunity to receive both paid and free updates to the video tutorials in the course structure, as well as to all important accompanying material, so that the course keeps up with the progress of AI technologies. Given the rapid pace of development, we cannot afford to fall behind. The platform that hosts the training material therefore connects you to a steady stream of current updates.

- An activation key for video lessons that allows you to link the course to one device.

- Bonus Photoshop tutorials.

Cost of the training course

Price: 570 USD, currently 320 USD with a 43% discount. [The course is now available to CGBClub members for free]

To purchase the course, to become a member of CGBClub, or to ask any questions, send a direct message to the author and head of the CGBandit educational project, Valentin Kuznetsov [contacts]

Curatorial support

Separately from access to the course, you can purchase our curatorial support, either immediately or some time after your purchase.

The price of curatorial support is $120 for two months. If you need more attention and assistance, you can always extend your support.